The European Economic Area gets an end to all that nagging

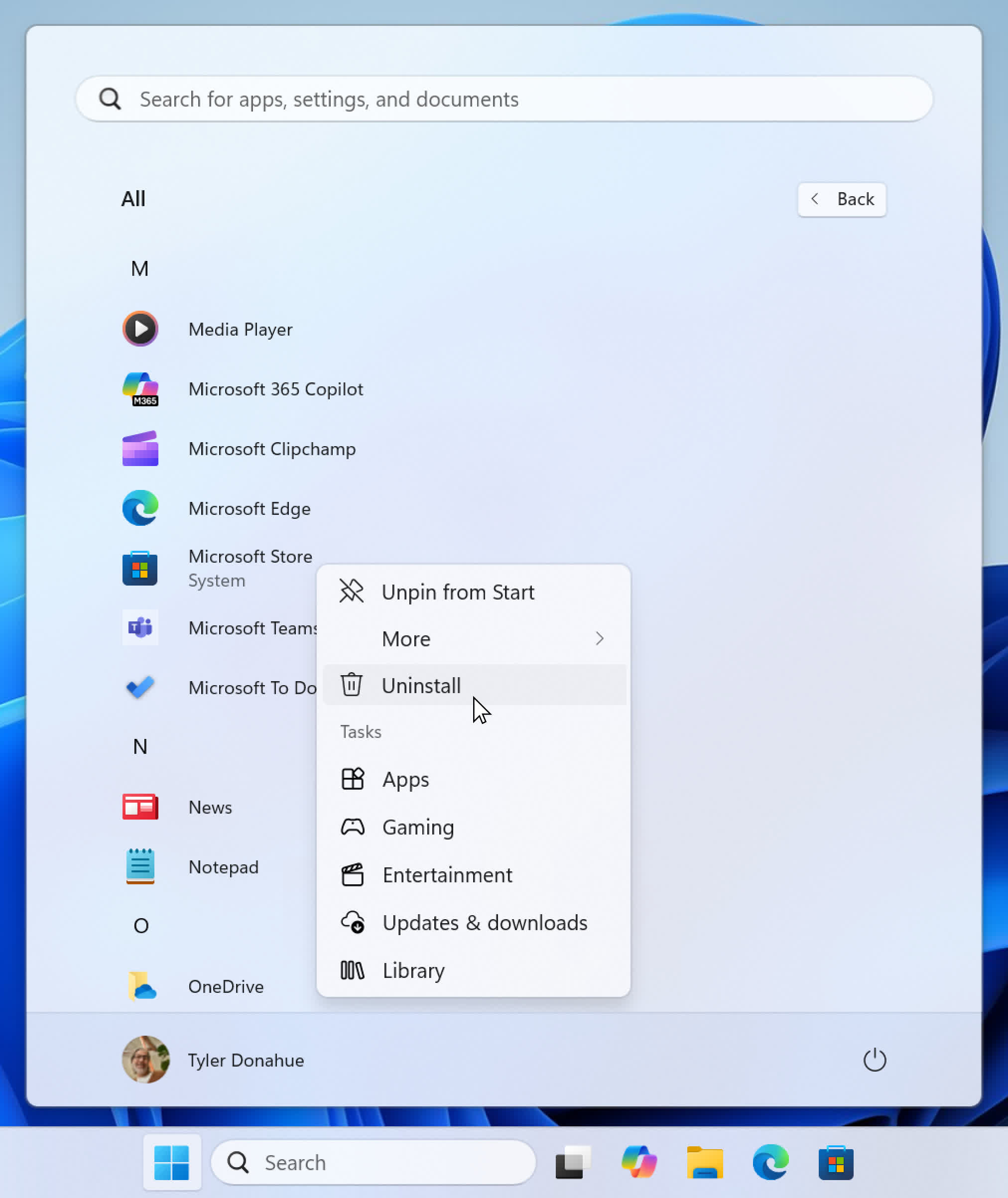

Ability to uninstall the Microsoft Store in the EEA.

- Microsoft is changing Edge for the better in the European Economic Area

- This is happening due to the Digital Markets Act in that region

- It means Edge will stop nagging to be the default browser

Microsoft is relenting on its constant prompting of folks to use the Edge browser under Windows 11, but not everyone is getting this welcome relief.

Sadly, the pushing of Edge in some notable ways is only being curtailed in the European Economic Area (EEA), due to rules imposed by the Digital Markets Act. With no such regulatory pressure in the US or elsewhere, these restrictions on Edge aren't being applied in those regions.

TechSpot noticed Microsoft's blog post introducing these various changes, the key one being that Edge will stop pestering you to set it as the default browser in Windows 10 and Windows 11. It will only ask to become your go-to web browser if you open Edge directly, and this change has already been implemented as of the end of May, with version 137.0.3296.52 of the browser.

Another change is that when Edge is uninstalled, you won’t get other Microsoft apps telling you to reinstall it.

Furthermore, when you set any web browser as your default choice, a whole lot more file formats (and link types) will be tied to it, rather than Edge still opening some file types. This was another sneaky way to get Edge back on your radar after you'd chosen to give it a wide berth.

Additionally, when you use the Windows search box and click on a web search result, it will open in Bing in your chosen default browser, rather than in Edge.

These other changes will roll out in the EEA during June; the main one, as noted, is already in place.

Away from Edge, another potentially sizeable plus point for Windows users in this region is that they'll be able to uninstall the Microsoft Store, should they wish. That move won't be coming until later in 2025, though.

Microsoft clarifies that if you remove the Microsoft Store, but have already used it to install some apps, that software will continue to receive updates in order to ensure it gets the latest security patches, which is good news.


Analysis: Give us all a break, Microsoft

These are welcome moves for Windows 11 and 10 users in this region, but it’d be nice if Microsoft could implement them elsewhere as well. However, without the relevant authorities breathing down the neck of the software giant, it won’t do anything of the sort. All these behaviors will persist outside the EEA because Microsoft clearly believes they may help drive more users to Edge.

In fact, they are more likely to drive people up the wall. Repeatedly insisting that folks should use Edge in scenarios of varying degrees of overreach is a tiresome policy, as is popping up Edge whenever possible rather than respecting the default browser choice. The default browser should always be the one that opens, whatever the situation; the clue is in the name, Microsoft.


Don't expect Microsoft's overall attitude to change anytime soon. Still, at least some people will get to enjoy a slightly less nag-laden experience in Windows 11, with fewer instances of Edge clambering onto their screen to remind them that it exists. The majority of us, however, can doubtless expect more pop-ups and general weirdness from some of the more left-field efforts Microsoft has made to promote its browser.